As referenced in my previous post, the numbers I was getting for memory usage from Kasper’s VBA Script did not make sense to me. After taking the time to better understand how Power Pivot stores data… it became clear to me why.

Let’s look at what the script does, and does not, include… and figure out how much we care. ![]()

What is being reported?

Recall that I had discussed two types of encoding: Hash encoding and Value encoding. The former is good for strings, and also for numeric values that are really “spread out”… 1, 10000, and 1000000. Value encoding is good for when the numbers are all clustered together… 10002, 10005, 100012. (We can just subtract 10002 from each number to get to 0, 3, 10… reducing the number of bits to store the numbers, and avoiding a hash table).

Okay, back to the script. It works by querying the DMVs (Dynamic Management Views), in particular, the “size” reported from the script comes from dictionary_size field of the $system.DISCOVER_STORAGE_TABLE_COLUMNS view.

“FROM $system.DISCOVER_STORAGE_TABLE_COLUMNS ” & _

“WHERE dictionary_size > 0”

So, it is reporting the size of some dictionary. What dictionary!? Hint: It rhymes with Sash Label. Stash Fable? Mash Cable? Lash Table? Bash Gable? Wow, it apparently rhymes with a lot of things. Also, I had to search for “Gable” on the internet to remind myself what it was. I have issues.

Anyway, Kasper’s script is LITERALLY only reporting on the size of the hash table. At first blush, I was like … WHAT THE HELLS KASPER!? ![]() So…

So…

In Defense of Kasper (or his script, or both)

- He says, right on the blog entry, “The SSAS DMV used to get this information does only return the segment size of a column, this is not all the memory that is used per column but will give enough information to get an good idea of the actual usage.”

- I got a ton of value out of the script, exactly as written. Heck, the script helps me make actual money! If a client wants a consult to help performance… running this script is typically the first thing I do. It instantly gives me valuable info about what columns are eating memory — focusing our attention to those things most likely to benefit from tuning.

- The relevance of the numbers it gives are higher than you expect. Let’s expand on that…

So… we are not reporting on the size of the “actual” data in the column… for hash encoded columns we are only reporting on the size of the hash table. And for value encoded columns we aren’t reporting on… anything. It sounds bad. However…

Assuming we are looking at this to optimize performance (and if you are not, you have got WAY too much time on your hands), I submit the size of the hash table is the leading indicator of performance.

First, you have an incredibly strong correlation… the larger the hash table, the larger the amount of data in the column. I mean, the hash table didn’t get huge by NOT having lots of values in the columns, right?

Second, hash encoded columns are slower than value encoded columns. Vertipaq is an in-memory storage engine. In. Memory. Processors are crazy fast at doing math. By comparison, having to read something out of memory is slow. With hash encoding, we have to go touch memory to do a lookup of the actual value (hey hash table, I got this value 777, what is that? Oh it is “Scott is a freak”, Thanks Mr Hash Table!) The extra memory touch from this lookup makes it slower.

With value encoding, we don’t have that. We just do some math! (We see 777, we call it a liar and add 10002 to get the actual value of 10779).

So, if I am optimizing my power pivot model, and looking for performance bottlenecks… I am looking for large hash tables first. And Kasper’s script does that. And we love him. We sing songs of his greatness and build statues in his honor. Well, maybe that is over-doing it a bit, but we would totally buy him beers!

Script Updates

“FROM $system.DISCOVER_STORAGE_TABLE_COLUMN_SEGMENTS ” & _

“WHERE used_size > 0”

Where Kasper’s script just pulls from DISCOVER_STORAGE_TABLE_COLUMNS, we are going to also pull from DISCOVER_STORAGE_TABLE_COLUMNS_SEGMENTS which has a handy USED_SIZE column. This is the memory used to store the actual encode values (Hurray!)

In a perfect world we would just modify the query to JOIN these two queries together, but alas, DMVs are not “real” sql tables and JOIN is not supported.

As opposed to doing anything complicated… I just did a bit of copy pasta in the script so that we run both queries, each in their own record set, each appending to the same table. Not elegant, but it works. You won’t hurt my feelings if you make changes to make it even better ![]()

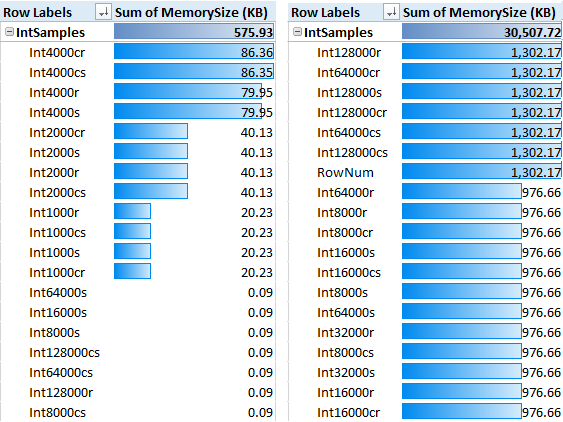

Before (left) and After (right) in a rather extreme example…

Here is the VBA Source Code

- The streak is alive! – August 10, 2017

- DAX KEEPFILTERS Plus Bonus Complaining! – July 20, 2017

- VAR: The best thing to happen to DAX since CALCULATE() – July 11, 2017

- Review: Analyzing Data with Power BI and Power Pivot for Excel – June 20, 2017

- Power BI Date Table – June 2, 2017

Scott Senkeresty here… Founder and Owner of Tiny Lizard. Yes, I have done some stuff: A Master’s Degree in Computer Science from California Polytechnic State University in beautiful San Luis Obispo, California. Over twelve years developing software for Microsoft, across Office, Windows and Anti-Malware teams… Read More >>

Hey Scott, thanks for sharing. I have tried it out and it works a treat – much more intuitive now that it contains all the data. I had a file that had 1 fact table and 4 lookup tables. I ran your code and found that my 2 columns (sales incl tax and sales ex tax) were both using up about 20% of the space each. It then immediately occurred to me that I don’t really need sales Inc Tax. I deleted it and my file size went from 126MB to 102MB, a 20% reduction – cool bananas!!

One thing I note is that the total file size reported in the pivot table is much higher than the actual file size by about 25%. Any ideas why? I thought it might be compression, but I think that PowerPivot would do better than 20% compression on my file.

Hi Matt / Scott, I have just tested this updated VBA script and with the file I used the reported size in the pivot table is about 50% higher than the actual file size. Presumably, the level of compression would be determined, to some degree at least, by the type of data stored in the tables? Would you agree?

Either way, it is a great tool to have available. Thanks

Don’t forget that the XLSX file is itself, a ZIP file containing lots of folders and files… and the powerpivot model encoded as XML. So, it’s a bit hard to wrap your head around file size… after the general XL overhead, the XML AND the compression from ZIP.

Scott, you can run Kasper’s SQL script using DAX query tables without the need of using VBA.

http://www.sqlbi.com/articles/linkback-tables-in-powerpivot-for-excel-2013/

http://www.powerpivotblog.nl/implementing-histograms-in-excel-2013-using-dax-query-tables-and-powerpivot

Scott

I tried this today at my training course and it didn’t work (Excel 2010). I think you may have said that there needs to be a different version. Is that correct? Can you post a working version for both 2010 and 2013? Or am I mistaken?

Ya know, Matt… I never really looked into 2010. I know Kasper’s script only worked on 2013. But now that you SAY it.. it look slike this is the probably the only thing holding me back… wbTarget.Model.DataModelConnection.ModelConnection.ADOConnection

which feels like a solvable problem. I’ll look into it.

Thanks for sharing.

In column B of the data table, your vba code stores :

– the ATTRIBUTE_NAME column from DISCOVER_STORAGE_TABLE_COLUMNS

– the COLUMN_ID column from DISCOVER_STORAGE_TABLE_COLUMN_SEGMENTS

I have observed that when column names contains dots characters, the two fields do not have the same value and are therefore not consolidated in the same row of the pivot table.

Luckily, DISCOVER_STORAGE_TABLE_COLUMNS can also return the COLUMN_ID. Replacing ATTRIBUTE_NAME by COLUMN_ID in the first query solve this issue.

Hi Scott,

Were you ever able to make the VBA Script work for PowerPivot in Excel 2010?

Thanks,

Josh

I am on Excel 2016 and can not run the script. The error message I get is:

An unexpected error occurred (file ‘pfthreadpool.cpp’, line 1045, function “PFThreadPoll::QueueWorkItemMultiple’).

Do you know why it is not working? Thank you

Hi Scott,

using a Minja-function we can get a pretty cool view of the memory-useage in PowerBI 😉

http://wp.me/p6lgsG-yI